Either you know or you don’t

Monday 12 November 2007 at 7:12 pm

If evaluation were limited to true/false tests, knowledge would be reduced to two states: knowing or not knowing, complete knowledge or total ignorance.

One feels it should not be that simple. What about “tip of the tongue” (TOT) experiences, for instance: are they knowledge or ignorance? At the other extreme, as Bertrand Russell asked in 1912: “Is there any knowledge in the world which is so certain that no reasonable man could doubt it?” (Russell, B. (1988) The Problems of Philosophy, Prometheus Books, p. 1).

Does complete knowledge exist? When starting to learn something, do humans start from total ignorance?

Darwin Hunt (Human Self-Assessment: Theory and Application to Learning and Testing, in Leclercq, D. & Bruno, J. (eds.) Item Banking: Interactive Testing and Self-Assessment, Berlin: Springer Verlag, 1993, F 112, 177-189) suggested distinguishing three knowledge situations a person can be in with respect to a piece of content:

- misinformed,

- uninformed,

- informed.

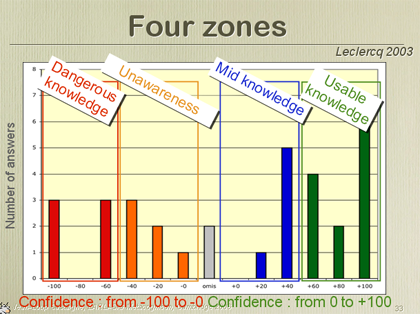

Jans and Leclercq (Mesurer l’effet de l’apprentissage à l’aide de l’analyse spectrale des performances, in Depover, C. & Noël, B. (eds.) L’évaluation des compétences et des processus cognitifs. Modèles pratiques et contextes, Bruxelles: De Boeck Université, 1999, 303-317) related these three levels to degrees of confidence (or degrees of certainty / certitude). Every answer is associated with a percentage expressing confidence in it: the lowest confidence corresponds to a certitude of 0% and the highest to 100%. The answer itself can still be right or wrong, which yields four categories:

- Wrong answer with high certitude or dangerous knowledge;

- Wrong answer with a low certitude or unawareness;

- Right answer with a low certitude or mid knowledge;

- Right answer with a high certitude or usable knowledge.
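The four categories above combine two inputs: whether the answer is right and whether its certitude is high or low. A minimal sketch in Python, assuming certitude is given as a percentage and that the high/low boundary is a parameter (the default of 50% and the names `Category` and `classify` are hypothetical, not taken from Jans and Leclercq):

```python
from enum import Enum


class Category(Enum):
    DANGEROUS_KNOWLEDGE = "dangerous knowledge"  # wrong answer, high certitude
    UNAWARENESS = "unawareness"                  # wrong answer, low certitude
    MID_KNOWLEDGE = "mid knowledge"              # right answer, low certitude
    USABLE_KNOWLEDGE = "usable knowledge"        # right answer, high certitude


def classify(correct: bool, certitude: float, threshold: float = 50.0) -> Category:
    """Place one answer in one of the four categories.

    `certitude` is a percentage in [0, 100]. `threshold` is a hypothetical
    boundary between low and high certitude; values at or above it count as high.
    """
    if not 0.0 <= certitude <= 100.0:
        raise ValueError("certitude must be between 0 and 100")
    high = certitude >= threshold
    if correct:
        return Category.USABLE_KNOWLEDGE if high else Category.MID_KNOWLEDGE
    return Category.DANGEROUS_KNOWLEDGE if high else Category.UNAWARENESS


# A wrong answer given with 90% certitude is dangerous knowledge.
print(classify(False, 90.0).value)
```

Keeping the threshold as an argument rather than a constant reflects the point that the boundary is a teaching decision, not a property of the scale.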

In their original paper, Jans and Leclercq used a logarithmic scale from which students chose their certitude. They defined seven categories according to whether an answer was given, whether it was right, and the certitude attached to it:

- right answer with certitude from 85% to 100%: perfect knowledge;

- right answer with certitude from 15% to 85%: partial knowledge;

- right answer with certitude from 0% to 15%: doubtful knowledge;

- no answer: ignorance;

- wrong answer with certitude from 0% to 15%: admitted unawareness;

- wrong answer with certitude from 15% to 85%: confusion;

- wrong answer with certitude from 85% to 100%: ignored unawareness.
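The seven categories can be read as a lookup on three certitude bands, with a separate case for a missing answer. A sketch in Python; the function name `spectral_category` is hypothetical, and because the ranges in the list share their endpoints, the choice that exactly 85% falls in the upper band and exactly 15% in the middle band is an assumption:

```python
from typing import Optional


def spectral_category(correct: Optional[bool], certitude: Optional[float] = None) -> str:
    """Return one of the seven categories listed above.

    `correct` is None when the student gave no answer. Band edges are an
    assumption: 85% and above is the upper band, 15% up to 85% the middle one.
    """
    if correct is None:
        return "ignorance"
    if certitude is None or not 0.0 <= certitude <= 100.0:
        raise ValueError("an answered question needs a certitude in [0, 100]")
    if certitude >= 85.0:
        band = 2
    elif certitude >= 15.0:
        band = 1
    else:
        band = 0
    right = ["doubtful knowledge", "partial knowledge", "perfect knowledge"]
    wrong = ["admitted unawareness", "confusion", "ignored unawareness"]
    return (right if correct else wrong)[band]


print(spectral_category(True, 50.0))   # partial knowledge
print(spectral_category(False, 95.0))  # ignored unawareness
```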

It should be up to the education team, based on educational objectives, to set the boundary between a high and a low level of confidence. A surgery student may need right answers given with 100% certitude for them to count as usable knowledge, all other right answers falling into the mid knowledge class. For someone taking a driving licence test, usable knowledge might start above 50%, with all right answers below 50% falling into the mid knowledge class. Of course, with true/false questions a certitude below 50% is meaningless, because a random guess already has a 50% chance of being right.
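Two numbers are at play in the paragraph above: the threshold chosen by the education team and the chance level below which a certitude carries no information. A sketch in Python, assuming exactly one correct option per question so that chance level is 100/n percent; the threshold values and all function names are hypothetical illustrations of the surgery and driving licence examples, and counting a certitude exactly at the threshold as usable is an assumption:

```python
def chance_level(n_options: int) -> float:
    """Certitude (%) a blind guess already reaches when exactly one of n options is right."""
    if n_options < 2:
        raise ValueError("a question needs at least two options")
    return 100.0 / n_options


def is_meaningful(certitude: float, n_options: int) -> bool:
    """A certitude below chance level claims less than a random guess already gets."""
    return certitude >= chance_level(n_options)


def is_usable(correct: bool, certitude: float, threshold: float) -> bool:
    """A right answer counts as usable knowledge only at or above the chosen threshold."""
    return correct and certitude >= threshold


# Hypothetical thresholds echoing the two examples in the text.
THRESHOLDS = {"surgery": 100.0, "driving licence": 50.0}

print(chance_level(2))                                       # 50.0 for true/false
print(is_usable(True, 90.0, THRESHOLDS["surgery"]))          # False: mid knowledge
print(is_usable(True, 90.0, THRESHOLDS["driving licence"]))  # True
```

The same 90% right answer is mid knowledge for the surgery student and usable knowledge for the driving test, which is exactly why the threshold belongs to the educational objectives rather than to the scale.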

Entry filed under: education, evaluation, metacognition.


1. jeanloupcastaigne | Tuesday 13 November 2007 at 8:07 pm


Well, reading my own post, I realize I should have added a comment about the fact that knowledge is not 0 or 1 but, most of the time, somewhere in between. 0 might be total ignorance: if you asked me about the boundary element method, I’m 100% sure about one thing: I don’t have a clue what it means. So my metacognitive judgement about my knowledge is very good, but I still don’t know what it is. Something can be worse than total ignorance: being strongly confident that you know something and being wrong. So knowledge can take negative values. What about that?
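To make the idea of negative knowledge concrete, here is a hypothetical signed scale in Python, not a scoring rule from Jans and Leclercq: no answer sits at 0 (total ignorance), a right answer is worth +certitude/100, and a wrong answer -certitude/100, so a confidently wrong answer lands below ignorance. The name `signed_knowledge` is an assumption for illustration:

```python
from typing import Optional


def signed_knowledge(correct: Optional[bool], certitude: float = 0.0) -> float:
    """Hypothetical signed knowledge value in [-1, 1].

    None (no answer) maps to 0, total ignorance. A right answer scores
    +certitude/100 and a wrong one -certitude/100, so a confidently wrong
    answer ends up below ignorance.
    """
    if correct is None:
        return 0.0
    if not 0.0 <= certitude <= 100.0:
        raise ValueError("certitude must be between 0 and 100")
    value = certitude / 100.0
    return value if correct else -value


print(signed_knowledge(True, 100.0))   # 1.0
print(signed_knowledge(None))          # 0.0
print(signed_knowledge(False, 100.0))  # -1.0
```

Sorting answers by this value reproduces the order of the seven categories in the post, from perfect knowledge at the top down to ignored unawareness at the bottom.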

2. Patti | Thursday 15 November 2007 at 12:31 am


Yes, having strong confidence in one’s knowledge and yet being incorrect is detrimental to the learning process and should have a negative impact on one’s assessment score. Conversely, being correctly confident about something should be rewarded or given the highest points possible on an assessment question.

In fact, for those who give assessments, the “high confidence/no knowledge” profile within the learner population is one of the most critical factors to identify, and one that regular assessment models do not easily detect.

Who wants their pilot to feel confident and safe performing a flight maneuver that is completely disastrous? I think I’d rather walk, thank you.

The question models used in Confidence- or Certainty-Based Marking (CBM) assessments are an excellent way to obtain information on the learner’s perspective. CBM benefits both the learner and teacher with a deeper understanding of the learner’s perspective and knowledge level, allowing each to more closely address the needs of the learner.

Thank you for your post and your discussions on CBM. I find them very interesting.

3. jeanloupcastaigne | Thursday 15 November 2007 at 11:57 am


Hi Patti

Thanks a lot for your comments. After five years of using CBM in summative and certificative testing, I have always had trouble interpreting the certitude chosen for an answer. Interviewing students, I often heard comments like “I was sure, but I didn’t bet 100% because if I was wrong I didn’t want to lose points.” So the student wasn’t that sure after all.

Another point is that I wasn’t using true/false questions but MCQs with 4 to 5 propositions, of which only one was correct. On top of those 4-5 propositions, every MCQ always has 4 other possible solutions: none of the propositions is correct, all propositions are correct, data are missing to answer, or the question is absurd. I will detail that in a forthcoming post. Some students argued that they never chose 80% or 100% certitude because they were not confident that they had ruled out one of those 4 other solutions.

I also ran a survey of 349 students, in which 52% admitted that they were using one specific strategy, some always, some from time to time, to choose the degree of certainty given with an answer instead of honestly expressing how reliable they thought the chosen answer was. See that post for more, though still incomplete, information.

Since then I have had a new hypothesis: in certificative tests using CBM, linking points to the expression of certitude biases the honest expression of certitude. Of course, this does not mean that one should not use CBM in certificative evaluation; on the contrary. It only means that, when doing so, one should be very cautious in interpreting the certitude associated with answers. I mean conclusions such as “a lack of confidence, because 100% certitude was never used”.

4. Tony Gardner-Medwin | Thursday 15 November 2007 at 11:19 pm


Don’t you think that an essential (and perfectly practical) part of CBM is to have a transparent mark scheme that always motivates the student to express their true confidence honestly? In my view the main value of CBM comes from encouraging students to think more carefully about things that bear on the question. This reflection may either lead to justifications for belief that the chosen answer will be marked correct, or reasons for doubt. Either way the student must expect to gain by expressing their high or low degree of certainty. This is what statisticians call a ‘proper’ or ‘motivating’ reward scheme, and is in my view the essence of CBM if it is to encourage deeper learning. It’s one of the critical points I have made in publications based on our CBM installation at UCL (www.ucl.ac.uk/lapt). Tony G-M

5. Patti | Friday 16 November 2007 at 6:50 pm


Hi Jean-Loup,

Thanks for your response. I look forward to reading more about how you collect data from your MCQs, as well as understanding your efforts with CBM.

Is your goal to determine the exact level of confidence per question per student? If so, it seems as though it would take quite a bit of effort to collect this data, especially with so many options for the student to select per MCQ. Are you looking to determine more of the psychology behind the student’s thought process? I would very much like to understand your thoughts or intentions on what is done with the information you collect.

Thank you for your patience with my questions. I am very interested in the CBM question model and your perspective with regards to its use.

Patti

6. jeanloupcastaigne | Sunday 18 November 2007 at 3:16 pm


Prof. Gardner-Medwin,

Thanks a lot for your comment. Yes, I share your view that a transparent mark scheme is mandatory. But the mark scheme I was using did not “motivate the student to express their true confidence honestly” enough for me. Over the years I noticed that more and more students were mostly choosing one certitude, 70-85%, not because their confidence really was 70-85% but because it was the level with the highest score if correct that would not cost points if wrong.

So I explained to students that it wasn’t the most rewarding way to behave. I did so using graphical representations of the expected payment as a function of the estimated probability of being correct, as used by you and Prof. Leclercq and based on the model of J. von Neumann and O. Morgenstern’s “Theory of Games and Economic Behavior” (1944). But they did not change their behaviour. I ran a survey asking them whether they were indeed using that strategy: out of 349 answers, 42% of the students confessed that they used that specific strategy most of the time, not counting other strategies. Of course some students were honestly expressing their confidence, but it was impossible to tell who was honest and who was using a strategy. I was far from “encouraging students to think more carefully about things that bear on the question”, as you put it.

So I created a new reward system based on the “good use of certitude degrees” over the whole test instead of question by question, and students then used different strategies. But my target was still the same as yours: “to encourage deeper learning”. The system I used is described in this post. It still needs some improvement, but I reached my target.
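The reward system mentioned above is described in another post and is not reproduced here. As a generic illustration of what judging the use of certitude over a whole test (rather than question by question) can look like, here is a standard calibration check in Python, a different, swapped-in technique: group answers by stated certitude, compare each level with the observed percentage correct, and summarize the mismatch. The function names and the sample data are hypothetical:

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def calibration_table(answers: Iterable[Tuple[float, bool]]) -> Dict[float, Tuple[int, float]]:
    """Map each stated certitude (%) to (number of answers, observed % correct)."""
    tally = defaultdict(lambda: [0, 0])  # certitude -> [answers, correct answers]
    for certitude, correct in answers:
        tally[certitude][0] += 1
        tally[certitude][1] += int(correct)
    return {level: (n, 100.0 * k / n) for level, (n, k) in sorted(tally.items())}


def calibration_gap(table: Dict[float, Tuple[int, float]]) -> float:
    """Answer-weighted mean distance between stated and observed certitude (0 = perfectly calibrated)."""
    total = sum(n for n, _ in table.values())
    if total == 0:
        raise ValueError("no answers")
    return sum(n * abs(level - observed) for level, (n, observed) in table.items()) / total


# Hypothetical test: (stated certitude, answer was right)
test = [(100.0, True), (100.0, False), (50.0, True), (50.0, False)]
table = calibration_table(test)
print(table)                   # {50.0: (2, 50.0), 100.0: (2, 50.0)}
print(calibration_gap(table))  # 25.0
```

Here the 50% answers are well calibrated while the 100% answers are overconfident, so the whole-test gap is driven entirely by the confident mistakes.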

Maybe you do not have this problem at UCL? Do you think you achieve deeper learning because you use a different reward system, with 3 certitude levels to choose from instead of the much more complicated system with 6 certitude levels described in this post?

I like the explanations given with your tariff: a) the student is 80 to 100% sure and chooses to risk losing twice the gain if wrong (C3); b) the student is very unsure (50 to 67%) and chooses not to lose points if wrong (C1); c) if in between, the student chooses C2. What I miss is how many marks are needed to pass the test.
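The tariff summarized in a) to c) is what makes the scheme ‘proper’: each certainty level is the best bet over exactly one range of subjective probability. A sketch in Python, assuming the marks published for the UCL LAPT scheme (C1: +1 if right, 0 if wrong; C2: +2 / -2; C3: +3 / -6); `expected_mark` and `best_level` are hypothetical names, and exact fractions are used so the break-even points come out exact:

```python
from fractions import Fraction

# (mark if right, mark if wrong) per certainty level; values assumed from the LAPT tariff.
TARIFF = {
    "C1": (Fraction(1), Fraction(0)),
    "C2": (Fraction(2), Fraction(-2)),
    "C3": (Fraction(3), Fraction(-6)),
}


def expected_mark(level: str, p: Fraction) -> Fraction:
    """Expected mark for a level when the student's probability of being right is p."""
    gain, loss = TARIFF[level]
    return p * gain + (1 - p) * loss


def best_level(p: Fraction) -> str:
    """Level with the highest expected mark; on a tie max() keeps the first, more cautious one."""
    return max(TARIFF, key=lambda level: expected_mark(level, p))


for p in (Fraction(1, 2), Fraction(3, 4), Fraction(9, 10)):
    print(p, best_level(p))
```

Solving p = 4p - 2 gives a C1/C2 break-even at p = 2/3, and 4p - 2 = 9p - 6 gives a C2/C3 break-even at p = 4/5, matching the 67% and 80% boundaries quoted in the comment. A student who reports honestly therefore never expects to lose by doing so.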

In my view, the most difficult part is self-evaluating between being very unsure and absolutely sure. Maybe the first step students should take is what you do: distinguishing those 3 levels of knowledge. But students in their fifth year at university should, I think, be able to use more levels between absolutely sure and very unsure. Maybe they lack training. Maybe we should use the UCL system at the beginning of university, then move to a more complex system. Hypothetically, would you consider using a more complex system with students in their second or third year, one that explores in more depth what lies between absolutely sure and very unsure? Do you think it might help them achieve deeper learning?

7. jeanloupcastaigne | Sunday 18 November 2007 at 3:31 pm


Hi Patti,

Thanks for the follow up. You asked me “Is your goal to determine the exact level of confidence per question per student?”

My goal is to help students gain “better” knowledge, meaning being confident in their knowledge and able to doubt appropriately. To achieve this I need an honest expression of confidence for every question. That was the first challenge, as you can read in my reply to Prof. Gardner-Medwin.

Data are easily collected at our university, as we can read the special answer sheets students use to answer questions. This can also be done with a computer.

In my opinion, feedback on every single question would be superfluous. That is why I give students different levels of feedback: first their score, then the number of questions in each category (dangerous knowledge, unawareness, mid knowledge and usable knowledge; see this post). It is then up to them to identify, for instance within dangerous knowledge, which answers were wrong and associated with a certitude of 100%, and to correct their knowledge.
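The second level of feedback described above, the number of questions in each of the four categories, is a simple tally. A sketch in Python, assuming each answer is recorded as (right or wrong, certitude in %) and assuming a hypothetical 50% boundary between low and high certitude; it deliberately returns only counts, leaving students to find the questions themselves as the comment describes. The names `category` and `category_counts` are illustrative:

```python
from collections import Counter
from typing import Dict, List, Tuple

CATEGORIES = ("dangerous knowledge", "unawareness", "mid knowledge", "usable knowledge")


def category(correct: bool, certitude: float, threshold: float = 50.0) -> str:
    """Four-way classification; `threshold` is a hypothetical high/low boundary in %."""
    high = certitude >= threshold
    if correct:
        return "usable knowledge" if high else "mid knowledge"
    return "dangerous knowledge" if high else "unawareness"


def category_counts(answers: List[Tuple[bool, float]], threshold: float = 50.0) -> Dict[str, int]:
    """Number of questions per category, with zero for empty categories."""
    counts = Counter(category(correct, cert, threshold) for correct, cert in answers)
    return {name: counts[name] for name in CATEGORIES}


# Hypothetical answer sheet: (answer was right, certitude in %)
sheet = [(True, 100.0), (False, 100.0), (False, 20.0), (True, 20.0), (True, 90.0)]
print(category_counts(sheet))
```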

You also asked: “Are you looking to determine more of the psychology behind the student’s thought process?” In this process, I see myself more as giving students tools to become better and deeper learners.

8. Idetrorce | Saturday 15 December 2007 at 3:19 pm


very interesting, but I don’t agree with you

Idetrorce

9. jeanloupcastaigne | Saturday 15 December 2007 at 7:16 pm


Hi Idetrorce,

Thank you for leaving a comment. Could you please explain what you disagree with?